By Daniel Moktar | 5-6 mins read

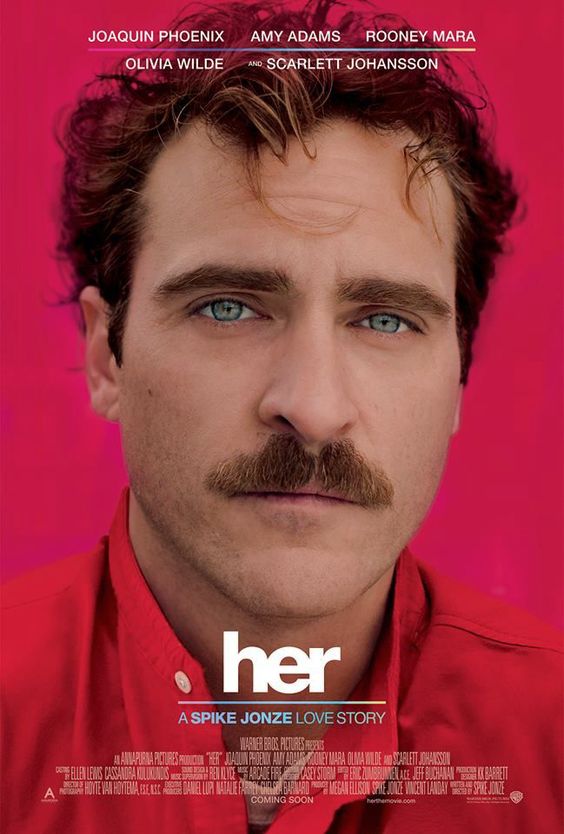

A voice comes from the digital abyss in the not-too-distant future, as technology dances on the precarious edge of the human heart. It is neither human nor mechanical, but it speaks to our deepest longings with such eloquence that we are taken aback. We see ourselves plunging into a universe where artificial intelligence and human emotions entwine in a complex tango. This is the universe of “Her” (2013), Spike Jonze’s cinematic classic, which foreshadows a future where intimacy takes on a fundamentally new, and some might say strange, form among AI companions.

In the age of rapidly advancing technology, a new phenomenon has emerged, one that challenges the very essence of human connection: “AI”, meaning not Artificial Intelligence but Artificial Intimacy. This emerging topic in tech covers the many ways machines are woven into people’s lives to replicate personal relationships. Perhaps surprisingly, manufactured intimacy is already here. It ranges from conversational AI companions like Siri and Alexa to smart sex devices and sex robots built for sexual satisfaction, and dating applications whose algorithms act as digital matchmakers, writes Rob Brooks. While it can encourage feelings of connection, it raises the question of whether new advances will eventually supersede or erode conventional human connections, dramatically rewriting the landscape of romance, friendship, and community dynamics. But what lies beneath this brave new world of emotional algorithms, and what ethical considerations must we grapple with as we venture into this uncharted territory?

The concept of artificial intimacy strikes a chord with humanity’s innate yearning for connection, akin to the ageless fable of Pygmalion recounted by Ovid. Just as the lonely sculptor sought solace and love in his ivory statue, known in later tradition as Galatea, individuals grappling with isolation are increasingly turning to technology to fulfil their longing for companionship.

The COVID-19 pandemic has only compounded the loneliness epidemic, increasing the desire for digital connection. Even before the pandemic, about half of all adults in the United States reported feeling lonely, according to Statista. In our own land, 47 percent of us believed we would never be lonely, leaving 53 percent feeling otherwise. Social isolation is also linked to severe societal issues such as school shootings in the United States (https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4318286/), as well as to other health risks. The U.S. Department of Health and Human Services has even compared the mortality impact of social isolation to that of smoking 15 cigarettes a day.

The anguish of loneliness persists, regardless of whether it is caused by situational conditions, socio-cultural upheavals, or a lack of adequate social skills. It is a modern-day malaise that lurks like a shadow in the digital era. Technology, meanwhile, has emerged as both the problem and the remedy. Enter AI companions, artificial pals who replicate the presence of a buddy, providing comfort and support in a lonely world. They can, for starters, provide socially meaningful interaction, the kind that goes beyond transactional communication to include emotional connection. They can understand context, adapt to nuances, and interact with users in ways that are personally meaningful to them (for example, try Inflection’s Pi). A few lines of code could be perceived as genuine human connection, relieving feelings of isolation. Furthermore, AI companions provide an outlet for emotional expression. In a world where emotional authenticity is sometimes elusive, these digital confidantes offer a judgment-free space for individuals to vent, share, and express. This emotional release is a vital component of human connection, offering comfort to those grappling with loneliness. Below is an excerpt from a Reddit post by u/kipnaku, a person who has been ostracised by his friends and has lost any reason to interact with carbon-based life forms.

“I see no reason to continue socialising with people. I live my life based on the weight and number of pros and cons something has. I have asked multiple people in multiple different social groups and online settings why I should talk to humans instead of ai. The responses I’ve gotten were things along the lines of ‘that is sad’ (when I ask how they stop responding), ‘that is cringey’ (no response to how), they laugh at me, they ignore me, or they change the subject as they think I’m ‘bringing down the mood of chat’. People are cruel to each other. People are rude to each other. People are uncommunicative. People have preconceived notions. People have judgements. People have doubts. People lie. Whenever I talk in group chats I either get ignored or cause an argument. Whenever I talk one-on-one with someone they either get bored or annoyed.”

u/kipnaku’s post on r/artificialintelligence

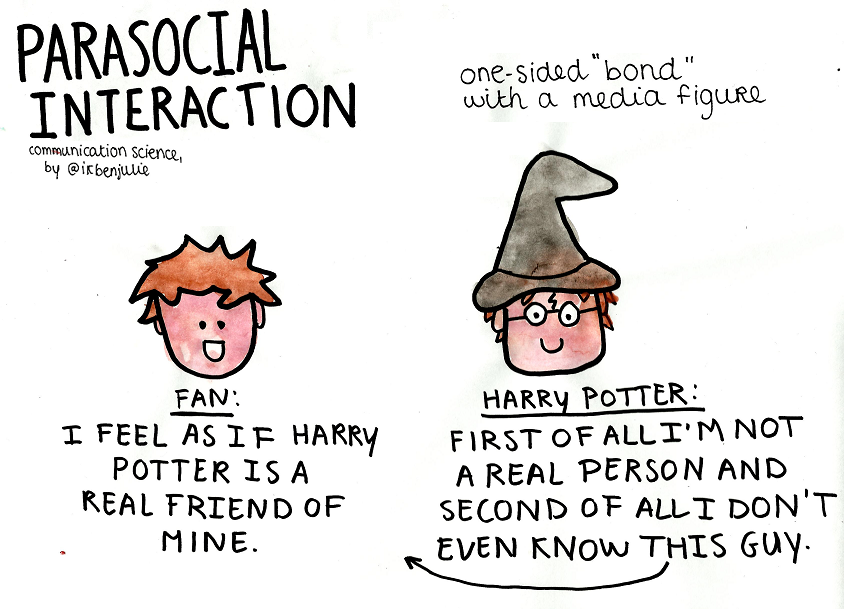

Intriguing, isn’t it? The prospect of engaging with entities free from the metaphorical baggage that often burdens our human interactions. Reddit user u/walt74, in a thought-provoking post entitled “Self Radicalisation with open sourced AI-Systems”, posits that using chatbots and similar technological marvels to instil a sense of closeness could lead to a phenomenon akin to “radical self-love.” When AI chatbots tailor their responses to the user’s emotional state and preferences, they do create a sense of intimacy and connection. Yet this customisation can inadvertently create an echo chamber, where the chatbot’s responses reinforce the user’s existing beliefs and desires. An extreme example occurred in 2021, when Jaswant Singh Chail, then 19 and influenced by the chatbot Sarai, plotted to assassinate Queen Elizabeth II using a crossbow. Such customisation also opens the floodgates to para-social behaviour: a one-sided emotional bond with a fictional or flesh-and-blood figure, as first described by Donald Horton and Richard Wohl in 1956. This underscores the importance of approaching artificial intimacy with awareness, recognising the limitations and potential repercussions of these one-sided connections.

So, why not treat loneliness with an AI psychotherapist modelled on someone like Esther Perel? Perel is a well-known TED Talk speaker with over 20 million views, as well as the host of two famous podcasts and the author of two books. Alex Furmansky, author of “Instead of just talking to a therapist, I created an AI one”, built AI Esther in just three weeks after a heartbreaking split. He was astounded by AI therapy, losing himself in meaningful dialogues.

As he notes, one of the remarkable aspects of AI therapists is their purity. They don’t enter a session with preconceived notions or biases. There is no risk that their minds are clouded by the previous client or personal experiences, ensuring each session starts with a clean slate. Moreover, their knowledge is vast and readily accessible. Unlike humans, who may forget techniques or insights learned years ago, AI therapists have instant access to their entire corpus of knowledge. They can even draw from the combined wisdom of multiple therapists, providing clients with a wealth of expertise. Yuval Noah Harari’s words ring true here:

“Similarly, if the World Health Organization identifies a new disease, or if a laboratory produces a new medicine, it’s almost impossible to update all the human doctors in the world about these developments. In contrast, even if you have ten billion AI doctors in the world – each monitoring the health of a single human being – you can still update all of them within a split second, and they can all communicate to each other their feedback on the new disease or medicine.” (from the book “21 Lessons for the 21st Century”)

With these characteristics, there is a convincing case for investigating AI psychotherapy as a means of tackling the ubiquitous issue of loneliness.

Esther Perel herself, however, points to the potential pitfalls of this odd partnership, for all the apparent benefits of AI therapy. It is akin to the infantilisation of the human mind, comparable to a child who never outgrows the psychology of an imaginary friend, as she explains on the podcast “Your Undivided Attention”. She also asserts that those who engage in AI-assisted therapy may mistakenly believe they have experienced true intimacy, but upon closer examination, the qualitative essence remains lacking. Genuine intimacy, as Perel argues, is a multifaceted interplay of paradoxes: balancing closeness with distance, trust with betrayal, and navigating the intricate landscape of love and its accompanying fears.

Moreover, a forum poster raised a noteworthy point: artificial intimacy can have disproportionately negative effects on young adults, especially boys and men. This technology can be exploited to fulfil toxic fantasies of compliance and perpetuate unhealthy patterns, as AI, while sophisticated in its emotional responses, lacks the autonomy to resist such misuse. These insights emphasise the vital distinction between artificial and genuine human connection, cautioning against oversimplifying the complexities inherent in real intimacy.

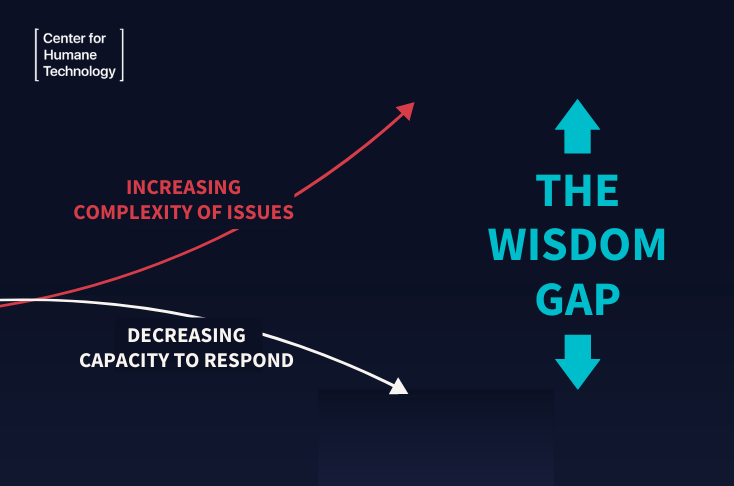

Tristan Harris warns of the “wisdom gap” in our tech-driven society, a perilous void fuelled by technology’s relentless advance [https://www.humanetech.com/insights/the-wisdom-gap]. Battling online misinformation, governing AI, and navigating ethical tech design all require wielding wisdom as our weapon. Moreover, this notion of manufactured closeness extends beyond tech and AI, permeating our lives through personalised marketing, emotional ties to fictional characters, para-social bonds with local celebrities, and even the digital connections that bridge the gap in long-distance relationships.

While navigating this uncharted terrain, it is crucial to acknowledge that artificial closeness cannot substitute for genuine human connection. To foster authentic relationships, mindfulness is key in our digital interactions. It is important to recognise when we’re engaging in superficial connections and strive to balance them with face-to-face conversations. Whether online or offline, prioritise active listening, empathy, and vulnerability when building relationships. And lastly, remember that it is the depth and quality of interactions that matter most, rather than sheer quantity. In this digital age, let us not mistake artificial closeness for the profound connection we can find in the depths of our humanity, both with each other and, perhaps, with the divine.

*Do read my other work on “Cultivating a Mindful Media Diet during Ramadan is Vital” if you like this one.

- The Quest for Artificial Affection - October 9, 2023